Tokens, Explained without Tylenol

Quick sanity check: we are not talking about the clunky arcade tokens at Chuck E. Cheese. Those tokens buy you a couple of rounds of Skee-Ball; our tokens buy AI attention. Tokenization is simply the step where your text gets chopped into small pieces called tokens, so an LLM can convert them into numbers and get to work. Sometimes a token is a whole word, sometimes a chunk of a word, sometimes a single character. Spaces and punctuation often count too. A practical rule for English: about four characters per token, or roughly three-quarters of a word. If you have ever watched a kid vaporize a cup of arcade tokens, you already understand why this matters for cost.

Three kinds of tokens you will recognize

Here is the simple idea in plain English. Some tokenizers treat a whole word as a single token, others break a word into a couple of pieces, and a few count every character. If the Chuck E. Cheese arcade used the same rules, one game would take one token, another would take two half tokens, and a super picky machine would charge per click. Same arcade, different meters.

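Full-word tokens. Easy to grasp and useful in basic setups. The downside shows up with names, slang, or specialized jargon: every distinct word needs its own vocabulary entry, so the vocabulary balloons, and a word the tokenizer never saw can only be mapped to a generic "unknown" token.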

Examples:

"Quarterly revenue grew 12 percent." → Quarterly | revenue | grew | 12 | percent | .

"Chicago-based" → Chicago | - | based

Subword tokens. Modern LLMs often split words into meaningful chunks like “market” and “ing.” That keeps the vocabulary compact and lets models handle new or rare terms by assembling them from familiar pieces.

Examples:

"marketing" → market | ing

"profitability" → profit | abil | ity

"McDonald’s" → Mc | Donald | ’s

Character-level tokens. Every character becomes a token. This is flexible for code or unusual spellings, but it burns more tokens to say the same thing. Use it when you need total granularity.

Examples:

"AI!" → A | I | !

"A/B test" → A | / | B | [space] | t | e | s | t

"2025-10-15" → 2 | 0 | 2 | 5 | - | 1 | 0 | - | 1 | 5

Note: Exact token splits vary by model and tokenizer; these examples are conceptual.
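To make the three styles concrete, here is a minimal Python sketch of each. The word and character splitters are simple rules, and the subword splitter uses greedy longest-match against a tiny, hypothetical vocabulary (TOY_VOCAB) built only to reproduce the examples above. Production tokenizers learn vocabularies of tens of thousands of pieces or more from data, typically with algorithms such as byte-pair encoding, so their splits will differ.

```python
import re


def word_tokens(text: str) -> list[str]:
    # Word-level sketch: each run of letters/digits is one token, each
    # punctuation mark is its own token, and whitespace is dropped.
    return re.findall(r"\w+|[^\w\s]", text)


def char_tokens(text: str) -> list[str]:
    # Character-level: every character, including spaces, is a token.
    return list(text)


# Hypothetical toy vocabulary, chosen to match the examples in this article.
TOY_VOCAB = {"market", "ing", "profit", "abil", "ity", "Mc", "Donald", "’s"}


def subword_tokens(word: str, vocab: set[str] = TOY_VOCAB) -> list[str]:
    # Greedy longest-match: at each position take the longest vocabulary
    # entry that fits; fall back to a single character if nothing matches.
    tokens, i = [], 0
    while i < len(word):
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                tokens.append(word[i:j])
                i = j
                break
        else:
            tokens.append(word[i])
            i += 1
    return tokens


print(word_tokens("Quarterly revenue grew 12 percent."))
# ['Quarterly', 'revenue', 'grew', '12', 'percent', '.']
print(subword_tokens("profitability"))  # ['profit', 'abil', 'ity']
print(char_tokens("AI!"))  # ['A', 'I', '!']
```

The fallback to single characters is a design choice worth noticing: it means the subword scheme never fails outright on an unfamiliar word, it just spends more tokens on it.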

Why tokenization matters most: it drives your bill

Let’s talk about the bill the way you’d explain an arcade tab to a friend. You don’t pay for “a question” or “an answer.” You pay for the tokens your text becomes on the way in and the tokens the model generates on the way out. Long prompts, packed context windows, and chatty responses quietly run the meter. Think of it as Chuck E. Cheese for software: every game eats a few tokens, and the more you play, the higher the total climbs. In short, treat tokens like any other metered unit of work.

Here is the part most teams learn the hard way. The next three stories show how fast token use can snowball into real dollars; think of it as checking the arcade balance and realizing the cup is almost empty. Learn from them so you can set limits, trim prompts, and avoid the same bill:

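A team on Azure OpenAI reported an unexpected $50K bill after sustaining very high token rates for only a few hours. The math checks out: running near your rate limit means consuming close to the maximum tokens per minute, every minute, and a few hours of that adds up fast.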

In the OpenAI community, an assistant fell into a loop and kept calling itself, chewing through tokens until rate limits intervened.

On the brighter side, a startup case study showed 30% token savings from prompt compression and cleanup, turning a looming cost problem into a manageable line item.

Different platforms, same lesson: the meter never forgets.
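To see why a few hours near the rate limit can produce a large invoice, multiply throughput by time by price. This sketch assumes a single blended price per million tokens, a simplification of real billing, which prices input and output separately. The inputs are made up to show the shape of the calculation; they are not the figures from the report above.

```python
def sustained_cost_usd(tokens_per_minute: float, hours: float,
                       usd_per_million_tokens: float) -> float:
    # Total tokens = rate x minutes; bill = (tokens / 1,000,000) x price.
    total_tokens = tokens_per_minute * 60 * hours
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical inputs: 5M tokens/minute for 4 hours at $10 per million.
print(sustained_cost_usd(tokens_per_minute=5_000_000, hours=4,
                         usd_per_million_tokens=10.0))  # 12000.0
```

The formula is linear in every input, so doubling the rate, the duration, or the price doubles the bill.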

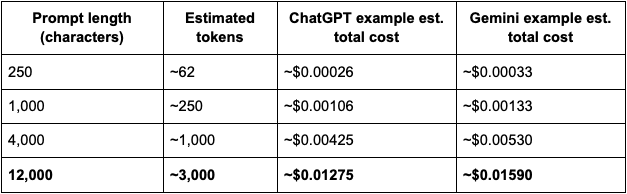

Callout: For policy docs and other long prompts, costs rise linearly with tokens; see the sidebar for a 12,000‑character example.

In typical enterprise stacks, OpenAI’s GPT models and Google’s Gemini models both price input and output tokens separately. Rates change over time, so always re-check the live pricing pages for the exact model you use in production. If you want to sleep well, set hard caps and alerts, cap max output tokens, cache reusable context, and prefer the smallest capable model for each step.
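One way to enforce a hard cap in your own orchestration code is a small budget object that every model call reports to. The TokenBudget class below is a hypothetical helper, not part of any vendor SDK: it stops a workflow once it exceeds a total token cap or a maximum number of calls, the kind of guard that targets runaway loops like the one described above. Feed it the per-request token counts your platform reports.

```python
class TokenBudgetExceeded(RuntimeError):
    """Raised when a workflow spends past its hard cap."""


class TokenBudget:
    """Hypothetical per-workflow guard: a total token cap plus a call cap."""

    def __init__(self, max_tokens: int, max_calls: int) -> None:
        self.max_tokens = max_tokens
        self.max_calls = max_calls
        self.used_tokens = 0
        self.calls = 0

    def charge(self, input_tokens: int, output_tokens: int) -> None:
        # Record one completed model call, then stop if either cap is exceeded.
        self.calls += 1
        self.used_tokens += input_tokens + output_tokens
        if self.calls > self.max_calls:
            raise TokenBudgetExceeded(f"call limit {self.max_calls} exceeded")
        if self.used_tokens > self.max_tokens:
            raise TokenBudgetExceeded(
                f"token cap {self.max_tokens} exceeded ({self.used_tokens} used)")

    @property
    def remaining(self) -> int:
        return max(self.max_tokens - self.used_tokens, 0)


budget = TokenBudget(max_tokens=20_000, max_calls=10)
try:
    while True:  # stands in for an agent loop that never decides to stop
        budget.charge(input_tokens=1_500, output_tokens=500)
except TokenBudgetExceeded as err:
    print("stopped:", err)  # stopped: call limit 10 exceeded
```

Because charge runs after a call returns, the cap stops the next call rather than the one already in flight; pairing it with a per-request limit on output tokens bounds that last call too.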

Quick sidebar: estimate tokens and dollars before you hit “Run”

Assumptions: English text at ~4 characters per token; response length equals twice the prompt length; example pricing based on current public pages at time of writing.
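Applying those assumptions to the 12,000-character policy document from the callout gives a quick worked estimate. The per-million-token prices p_in and p_out are placeholders ($1 and $4), not any vendor's actual rates; substitute the figures from the pricing page for your model.

$$
\begin{aligned}
T_{\text{in}} &= \frac{12{,}000\ \text{characters}}{4\ \text{characters/token}} = 3{,}000\ \text{tokens} \\
T_{\text{out}} &= 2\,T_{\text{in}} = 6{,}000\ \text{tokens} \\
\text{cost} &= \frac{T_{\text{in}}}{10^{6}}\,p_{\text{in}} + \frac{T_{\text{out}}}{10^{6}}\,p_{\text{out}} \\
&= 0.003 \times \$1 + 0.006 \times \$4 = \$0.027 \text{ per call}
\end{aligned}
$$

At 10,000 such calls a day, that placeholder rate comes to about $270 a day, which is why volume, not the single call, drives the bill.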

How to read this: even small prompts are inexpensive per call. Costs scale with volume, response length, and how much reference material you stuff into the context window. Check the vendor pages for the exact SKU you use.

How to keep costs in check by tracking tokens

If the intro was the arcade, this is the part where you watch the token cup. A little prep and a few guardrails keep the fun going without a surprise bill. Here is a simple, no‑jargon way to stay on top of it:

Estimate before you build. Multiply characters by 0.25 to get token estimates. Add the model’s expected response size. Then apply the vendor’s input and output rates. It is back‑of‑the‑napkin math that prevents surprise invoices.
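The napkin math above fits in a few lines of Python. This sketch assumes the four-characters-per-token rule from the intro and takes prices per million tokens as inputs; the prices in the usage line are placeholders, not real vendor rates.

```python
CHARS_PER_TOKEN = 4  # English rule of thumb; real tokenizers vary by model


def estimate_tokens(text: str) -> int:
    # characters x 0.25, rounded up so a short string never estimates to zero
    return -(-len(text) // CHARS_PER_TOKEN)


def estimate_cost_usd(prompt: str, expected_output_tokens: int,
                      usd_per_m_input: float, usd_per_m_output: float) -> float:
    # Input and output are priced separately, per million tokens.
    input_tokens = estimate_tokens(prompt)
    return (input_tokens * usd_per_m_input
            + expected_output_tokens * usd_per_m_output) / 1_000_000


prompt = "x" * 2_000  # stand-in for a 2,000-character prompt
cost = estimate_cost_usd(prompt, expected_output_tokens=500,
                         usd_per_m_input=2.0, usd_per_m_output=8.0)
print(estimate_tokens(prompt), f"${cost:.4f}")  # 500 $0.0050
```

Once real traffic exists, swap the character-based estimate for the token counts your platform reports; the pricing step stays the same.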

Measure during execution. Your platform should expose per‑request token counts. Watch for spikes that come from extra context, verbose styles, or unbounded outputs. Treat it like a utility meter.
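A simple way to catch spikes in those per-request counts is to compare each request with the recent norm. This sketch flags any request whose total tokens exceed three times the median of the previous 20 requests; both thresholds are arbitrary starting points to tune, and a real setup would raise alerts rather than print.

```python
from statistics import median


def flag_spikes(token_counts: list[int], window: int = 20,
                factor: float = 3.0) -> list[int]:
    # Return indexes of requests whose total tokens exceed `factor` times
    # the median of the previous `window` requests.
    flagged = []
    for i, count in enumerate(token_counts):
        history = token_counts[max(0, i - window):i]
        if history and count > factor * median(history):
            flagged.append(i)
    return flagged


counts = [900, 1_100, 1_000, 950, 4_800, 1_050]
print(flag_spikes(counts))  # [4]
```

A median is used instead of a mean so that one earlier spike does not inflate the baseline and hide the next one.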

Budget by workflow, not only by department. Track common flows such as “customer email summary,” “weekly FP&A brief,” or “policy Q&A” with average input and output tokens. Tie those to business outcomes so you can compare cost per deliverable.
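Given a log of requests tagged by workflow, average tokens and cost per run take only a few lines. The record format (workflow name, input tokens, output tokens) and the prices are hypothetical; if one run produces one deliverable, the average cost per run is your cost per deliverable.

```python
from collections import defaultdict
from typing import Iterable


def workflow_summary(records: Iterable[tuple[str, int, int]],
                     usd_per_m_input: float,
                     usd_per_m_output: float) -> dict[str, dict[str, float]]:
    # Accumulate [runs, total_input, total_output] per workflow name.
    totals: dict[str, list[int]] = defaultdict(lambda: [0, 0, 0])
    for name, input_tokens, output_tokens in records:
        entry = totals[name]
        entry[0] += 1
        entry[1] += input_tokens
        entry[2] += output_tokens

    summary = {}
    for name, (runs, total_in, total_out) in totals.items():
        avg_in = total_in / runs
        avg_out = total_out / runs
        # Average cost per run at the given per-million-token prices.
        avg_cost = (avg_in * usd_per_m_input + avg_out * usd_per_m_output) / 1_000_000
        summary[name] = {"runs": runs, "avg_input": avg_in,
                         "avg_output": avg_out, "avg_cost_usd": avg_cost}
    return summary


logs = [
    ("customer email summary", 1_200, 300),
    ("customer email summary", 800, 200),
    ("policy Q&A", 9_000, 600),
]
for name, stats in workflow_summary(logs, usd_per_m_input=2.0, usd_per_m_output=8.0).items():
    print(f"{name}: avg {stats['avg_input']:.0f} in / {stats['avg_output']:.0f} out, "
          f"${stats['avg_cost_usd']:.4f} per run")
```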

Design for token efficiency. Subword tokenizers already help, but prompt design matters more. Remove boilerplate, keep instructions crisp, and narrow the task. The model should not search the ocean when you only want what is in the harbor.

Educate your authors. Give a simple one‑pager to anyone writing prompts. Point them to plain‑English explainers when they want more depth.

Conclusion

Tokenization is not a dark art. It is slicing the baguette so the model can eat at business speed and your budget stays in line. Once your team treats tokens as the unit of work, you can forecast usage, design lean prompts, and track spend like any other operating cost. Start with estimates, watch real counts in production, and keep trimming unnecessary tokens. The result is steadier performance, fewer surprises, and AI that pays for itself.

Sources for further reading